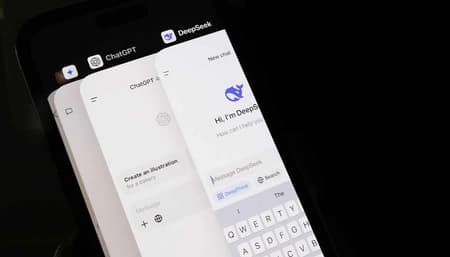

Why US platforms are turning to Chinese AI models

Chinese open source AI like DeepSeek and Qwen is winning US clients by mixing solid performance with flexibility and lower operating costs.

The mHC idea lets DeepSeek train models of 3B to 27B parameters more efficiently, supporting its push to rival US labs while spending only a fraction as much on hardware.

Chinese coding models now sit close to Claude-tier performance, while Doubao and Yuanbao show that domestic AI platforms can win huge mainstream audiences.

AI could take over most jobs in 10–20 years, a DeepSeek researcher warns, urging tech firms to protect society amid rising risks and disruption.

DeepSeek’s R1 cost about $294K to train, using 512 H800 GPUs for 80 hours. Inference costs about $0.55 per million input tokens and $2.19 per million output tokens.
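To make the quoted figures concrete, here is a minimal sketch of the arithmetic behind them. The function name `request_cost_usd` is hypothetical, and the per-GPU-hour number is only the quoted training cost divided by the quoted GPU-hours, not a reported rental price.

```python
# Prices quoted in the blurb above, in USD per one million tokens.
INPUT_USD_PER_M = 0.55
OUTPUT_USD_PER_M = 2.19


def request_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of one request at the quoted per-million-token prices."""
    total = input_tokens * INPUT_USD_PER_M + output_tokens * OUTPUT_USD_PER_M
    return total / 1_000_000


# Training figures quoted above: 512 GPUs running for 80 hours.
GPU_HOURS = 512 * 80

# Assumption: the ~$294K figure covers exactly these GPU-hours and nothing else.
IMPLIED_USD_PER_GPU_HOUR = 294_000 / GPU_HOURS
```

Under these assumptions, a request with 2,000 input tokens and 500 output tokens would cost roughly a fifth of a cent, and the training run works out to 40,960 GPU-hours at about $7 per GPU-hour.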

DeepSeek-V3 is a major upgrade over V2: it grows from V2's 236B parameters to 671B in total, keeps a 128K context window, and delivers stronger performance, all offered for free.

DeepSeek's Nano-vLLM cuts vLLM's memory use by 5x using a dynamic KV cache, runs on CPU via NumPy, and adds speculative prefill. It targets edge AI developers.

DeepSeek's R1 is a lightweight AI model that runs on a single GPU.