Qwen 3.5 is Alibaba’s new flagship family of open and hosted models aimed at “native multimodal agents”: models that reason over text, images, and long videos and can also act as autonomous agents on phones and PCs. The main open‑weight version, Qwen3.5‑397B‑A17B, uses a sparse mixture‑of‑experts (MoE) architecture with 397B total parameters but only 17B active per token. This keeps inference cost relatively low while bringing performance close to, and on many public benchmarks on par with, frontier US models from OpenAI, Anthropic, and Google. On knowledge and reasoning benchmarks such as MMLU‑Pro and GPQA, and on instruction‑following tests such as IFBench, Qwen 3.5 matches or beats some current US models on specific tasks, although which model leads still varies by metric.
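To make the “397B total, 17B active” split concrete: in a sparse MoE layer, a small router scores every expert for each token and only the top‑k experts are actually run, so most expert weights sit idle for that token. By the figures above, 17B / 397B ≈ 4.3% of the parameters are active per token. The following is a toy, pure‑Python sketch of generic top‑k routing only. The expert count, k, router weights, and every function name are hypothetical and do not reflect Qwen 3.5’s actual configuration or code.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def top_k_route(logits: Sequence[float], k: int) -> Tuple[List[int], List[float]]:
    """Return the k highest-scoring expert indices and their softmax gate weights."""
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    peak = max(logits[i] for i in chosen)  # subtract the max for numerical stability
    exps = [math.exp(logits[i] - peak) for i in chosen]
    total = sum(exps)
    return chosen, [e / total for e in exps]


def moe_forward(x: Vector, router: List[Vector], experts: List[Expert], k: int) -> Vector:
    """Hypothetical sparse MoE layer for one token: only the k routed experts run."""
    # Router logits: one dot product per expert (a simple linear router, assumed).
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in router]
    chosen, gates = top_k_route(logits, k)
    out = [0.0] * len(x)
    for idx, gate in zip(chosen, gates):
        y = experts[idx](x)  # experts not in `chosen` are never called for this token
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out


def make_expert(scale: float, idx: int, calls: List[int]) -> Expert:
    """Toy expert that scales its input and records that it was executed."""
    def expert(x: Vector) -> Vector:
        calls.append(idx)
        return [scale * xi for xi in x]
    return expert


if __name__ == "__main__":
    calls: List[int] = []
    experts = [make_expert(float(i + 1), i, calls) for i in range(4)]  # 4 toy experts
    router = [[0.0, 0.0], [0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]  # favours experts 2 and 3
    y = moe_forward([1.0, 1.0], router, experts, k=2)
    print(y, sorted(calls))  # [3.5, 3.5] [2, 3]
    print(f"active share from the article's figures: {17 / 397:.1%}")  # 4.3%
```

Only experts 2 and 3 execute; the other two contribute nothing and cost nothing for this token. In real models the “active” count also includes shared components such as attention and embeddings, so the active share is not simply k divided by the number of experts.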

The model is natively multimodal, with early fusion of text and vision, improved spatial reasoning, better OCR and document understanding, and support for videos up to roughly two hours, which enables use cases like GUI agents, video‑to‑code, and complex visual automation workflows. Qwen 3.5 also pushes hard on long‑context and agentic use: the cloud “Plus” variant exposes a 1M‑token context window with built‑in tools for web search and code execution, while reinforcement‑learning‑driven post‑training focuses on multi‑step agents that can plan, call tools and operate in realistic environments.

Compared with the earlier Qwen3 line, throughput has reportedly improved by up to roughly 8x on large workloads, and the base model’s knowledge and coding scores now fall in the same band as much larger systems such as the 1T‑parameter Qwen3‑Max or leading closed Chinese competitors. On the ecosystem side, Alibaba continues to lean into open‑weights distribution via Hugging Face and ModelScope, and has expanded language coverage from 119 to 201 languages and dialects, which helps Qwen 3.5 function as a global open‑model standard rather than a China‑only stack. Media coverage highlights that this release narrows the gap with US leaders, especially among open‑source models, while noting that some direct comparisons use slightly older US model versions and that real‑world deployment, not download counts alone, remains the key metric.

Overall, Qwen 3.5 positions Alibaba as one of the few players outside the US with a broadly competitive, multimodal, agent‑ready stack that is available both as open weights for local deployment and as a managed cloud service.

Key new features

- Native multimodal input and output across text, images, documents and long videos, with stronger OCR, spatial reasoning and video understanding than the previous generation.

- Hybrid MoE architecture (Gated DeltaNet plus sparse experts) that activates only about 17B of its 397B total parameters per token, keeping per‑token compute low while delivering near‑frontier capability.

- Up to 1M‑token context window in the hosted Plus version, enabling reasoning over book‑scale documents or multi‑hour sessions and more persistent agent workflows.

- Expanded multilingual support covering 201 languages and dialects, including many low‑resource languages, plus a larger tokenizer vocabulary of 250k tokens for faster encoding and decoding.

- Strong gains on agent benchmarks like BFCL‑V4, DeepPlanning, Tool‑Decathlon and BrowseComp through large‑scale reinforcement learning in multi‑tool environments.

- Open‑weight release on Hugging Face and ModelScope, with Alibaba positioning Qwen as a default open model family for developers who want both local and cloud options.
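The “Gated DeltaNet” half of the hybrid design refers to a linear‑attention layer built on the gated delta rule. Instead of a key‑value cache that grows with every token, it keeps a fixed‑size state matrix S and updates it per token as S_t = S_{t−1} · α_t(I − β_t k_t k_tᵀ) + β_t v_t k_tᵀ, then reads with o_t = S_t q_t. Here α_t is a decay gate and β_t a write strength. The sketch below shows only that recurrence for a single head in pure Python. Real layers derive k, v, q, α and β from learned projections and use many heads and chunked parallel kernels. All names and dimensions here are hypothetical, and this is not Qwen 3.5’s implementation.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]  # state S has d_v rows and d_k columns


def l2_normalize(x: Vector) -> Vector:
    """Scale a key or query to unit length (delta-rule keys are usually normalized)."""
    norm = math.sqrt(sum(xi * xi for xi in x)) or 1.0
    return [xi / norm for xi in x]


def gated_delta_step(S: Matrix, k: Vector, v: Vector, alpha: float, beta: float) -> Matrix:
    """S_t = alpha * S_{t-1} (I - beta k k^T) + beta v k^T, expanded without forming I."""
    Sk = [sum(row[j] * k[j] for j in range(len(k))) for row in S]  # value currently stored at k
    return [
        [alpha * (S[i][j] - beta * Sk[i] * k[j]) + beta * v[i] * k[j] for j in range(len(k))]
        for i in range(len(S))
    ]


def read_state(S: Matrix, q: Vector) -> Vector:
    """o_t = S_t q_t."""
    return [sum(row[j] * q[j] for j in range(len(q))) for row in S]


def gated_delta_scan(
    keys: Sequence[Vector],
    values: Sequence[Vector],
    queries: Sequence[Vector],
    alphas: Sequence[float],
    betas: Sequence[float],
) -> Tuple[List[Vector], Matrix]:
    """Process a sequence token by token with a fixed-size d_v x d_k state."""
    d_k, d_v = len(keys[0]), len(values[0])
    S: Matrix = [[0.0] * d_k for _ in range(d_v)]
    outputs: List[Vector] = []
    for k, v, q, a, b in zip(keys, values, queries, alphas, betas):
        S = gated_delta_step(S, l2_normalize(k), v, a, b)
        outputs.append(read_state(S, l2_normalize(q)))
    return outputs, S


if __name__ == "__main__":
    key = [1.0, 0.0]
    outs, S = gated_delta_scan(
        keys=[key, key],
        values=[[1.0, 2.0, 3.0], [5.0, 5.0, 5.0]],
        queries=[key, key],
        alphas=[1.0, 1.0],
        betas=[1.0, 1.0],
    )
    print(outs)  # [[1.0, 2.0, 3.0], [5.0, 5.0, 5.0]]
```

Writing a second value under the same key replaces the first one, which is the “delta” part of the rule. Plain linear attention (S_t = S_{t−1} + v_t k_tᵀ) would instead return the sum [6, 7, 8]. Setting α_t below 1 decays older memories, and the state stays d_v × d_k no matter how long the sequence gets.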

How much it closes the US gap

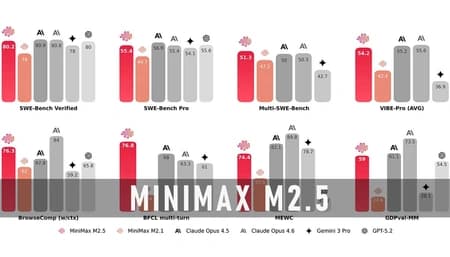

- Benchmarks published by Alibaba and secondary outlets suggest Qwen 3.5 now sits in roughly the same performance band as models like Gemini 3 Pro, Claude 4.5 and GPT‑5.2 on several reasoning, multilingual and instruction‑following suites, sometimes leading, sometimes trailing.

- The biggest advances are in instruction‑following and agent benchmarks, where Qwen 3.5 reportedly sets or matches the state of the art among publicly benchmarked systems, strengthening China’s position in the high‑end open‑source segment.

- At the same time, coverage stresses that many comparisons use earlier or specific variants of US models and that open‑model download metrics do not directly translate into production usage, so a full convergence with the US ecosystem cannot be assumed yet.