DeepSeek V4 vs Claude/GPT: Strengths, Controversies Ahead

DeepSeek V4 is expected in early March and is said to top Claude and GPT on coding benchmarks with a 1M-token context window, but a Blackwell chip scandal is stirring anger in the US.

Seedance 2.0 shows that China's AI video models can rival those from OpenAI and Google, though its realism raises serious ethical and legal questions.

Alibaba’s Qwen 3.5 boosts multimodal and agent skills, narrowing the gap with US AI leaders while staying efficient through a sparse MoE design.

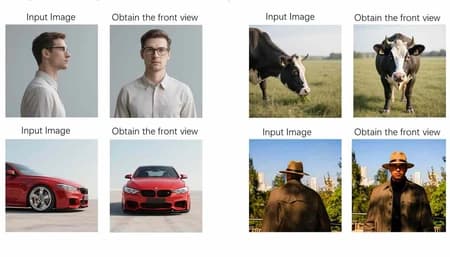

Qwen Image 2.0 unifies generation and editing, with sharper 2K output, more realistic people, and typography good enough to finally make text-heavy designs workable.

Alibaba's reasoning model pairs test-time scaling with web search and code tools, topping Gemini 3 Pro and GPT-5.2 on Humanity's Last Exam (HLE) with search and on math benchmarks.

Chinese open-source AI models such as DeepSeek and Qwen are winning US clients by combining solid performance with flexibility and lower operating costs.

The mHC (manifold-constrained hyper-connections) technique lets DeepSeek train models from 3B to 27B parameters more efficiently, supporting its push to rival US labs while spending only a fraction of what they spend on hardware.

Qwen-Image-2512 offers open-source, Apache-licensed image generation that challenges Google's Nano Banana Pro for flexible, enterprise-ready workflows.

Chinese coding models now sit close to Claude-tier performance, while Doubao and Yuanbao show that domestic AI platforms can win huge mainstream audiences.

As enterprises discover capable open models they can run themselves, the pricing power of closed US platforms starts to look increasingly fragile.

Qwen-Image-Layered splits flat images into editable RGBA layers, enabling consistent, Photoshop-like edits with precise control over each element.

Z-Image-Turbo is a 6B-parameter model from Alibaba for fast, photorealistic text-to-image generation, with support for rendering English and Chinese text.

DeepSeek's R1 cost about $294K to train, using 512 H800 GPUs for 80 hours. Inference costs about $0.55 per million input tokens and $2.19 per million output tokens.
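
As a rough illustration of what those per-token rates mean in practice, the sketch below estimates the bill for a hypothetical workload. It assumes flat pricing at the two quoted rates and ignores any caching or off-peak discounts a provider may offer; the token counts and the `request_cost` helper are made up for illustration.

```python
# Per-million-token prices quoted above for DeepSeek R1 (USD).
# Assumption: flat rates, no caching or off-peak discounts.
INPUT_PRICE_PER_M = 0.55
OUTPUT_PRICE_PER_M = 2.19


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of one request at the quoted per-million-token rates."""
    return (input_tokens * INPUT_PRICE_PER_M
            + output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000


# Hypothetical workload: 2,000 input and 1,000 output tokens per request.
per_request = request_cost(2_000, 1_000)
print(f"${per_request:.5f} per request")
print(f"${per_request * 100_000:,.2f} per 100k requests")
```

Output tokens dominate this estimate because they are priced roughly four times higher than input tokens, so generation-heavy workloads cost noticeably more than prompt-heavy ones.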

DeepSeek-V3 is a major upgrade over V2, scaling from V2's 236B parameters to 671B total (37B active per token) while keeping a 128K context window and delivering superior performance, all offered for free.

Alibaba is developing a new AI chip to reduce China's reliance on foreign technology like NVIDIA's, amid ongoing export controls and supply chain challenges.

Microsoft's open-source VibeVoice-1.5B model generates synthetic speech that could disrupt the audiobook industry by reducing reliance on human narrators.

Qwen-Image-Edit adds precise bilingual text editing, along with semantic and appearance editing through dual encoding, and is available via Qwen Chat, the API, Hugging Face, and as open source.

Qwen-Image is a new 20B-parameter open-source model that combines accurate bilingual text rendering with powerful image editing and generation capabilities.

China's Zhipu AI has released the GLM-4.5 models, which outperform Claude 4 Sonnet on benchmarks, a sign of China's leadership in open-source AI.

Alibaba's Qwen3-Coder is a fully open-source agentic coding model: a 480B-parameter MoE (35B active) with a massive context window that matches GPT-4 and Claude.